Exercise 9 - Linear transforms

Task 1

You are given data from two classes with means and covariances

a)

Compute the eigenvectors and eigenvalues of the covariance matrices, and use them to sketch the contours of the covariance matrices in a plot.
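For a), here is a possible NumPy sketch. `np.linalg.eigh` returns the eigenvalues and eigenvectors of a symmetric matrix. A contour of constant Mahalanobis distance $c$ is an ellipse whose axes point along the eigenvectors and have half-lengths $c\sqrt{\lambda}$. The covariance values and the helper `covariance_ellipse` are hypothetical, so substitute the matrices given in the task.

```python
import numpy as np

# Hypothetical covariance matrices; replace with the ones given in the task.
Sigma1 = np.array([[0.5, 0.0], [0.0, 2.0]])
Sigma2 = np.array([[2.0, 0.0], [0.0, 2.0]])

def covariance_ellipse(mu, Sigma, c=1.0, n_points=100):
    """Points on the contour (x - mu)^T Sigma^{-1} (x - mu) = c^2, shape (2, n_points).

    The ellipse axes point along the eigenvectors of Sigma and have
    half-lengths c * sqrt(eigenvalue).
    """
    eigvals, eigvecs = np.linalg.eigh(Sigma)       # eigh: Sigma is symmetric
    t = np.linspace(0.0, 2.0 * np.pi, n_points)
    circle = np.stack([np.cos(t), np.sin(t)])      # unit circle, shape (2, n)
    # Scale by sqrt(eigenvalues), rotate by the eigenvectors, shift by the mean.
    ellipse = eigvecs @ (c * np.sqrt(eigvals)[:, None] * circle)
    return ellipse + np.asarray(mu, dtype=float)[:, None]

for name, S in [("Sigma1", Sigma1), ("Sigma2", Sigma2)]:
    vals, vecs = np.linalg.eigh(S)
    print(name, "eigenvalues:", vals)
    print(name, "eigenvectors (columns):\n", vecs)
```

To draw a contour, compute `E = covariance_ellipse(mu, Sigma1, c=2.0)` with `mu` the class mean, then call `plt.plot(E[0], E[1])` in matplotlib. Drawing a few values of `c` gives nested contours.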

b)

Show that the decision boundary in this case can be expressed as

where $\mathbf{x}$ is the feature vector.
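As a starting point for b), here is a sketch of the standard Gaussian (quadratic) discriminant. It assumes normally distributed classes and equal priors $P(\omega_1) = P(\omega_2)$. These are assumptions, so use the priors from the task if they differ. The symbols $\boldsymbol{\mu}_i$, $\Sigma_i$ and $\omega_i$ are introduced here for the class means, covariances and labels.

$$
g_i(\mathbf{x}) = -\tfrac{1}{2}(\mathbf{x}-\boldsymbol{\mu}_i)^T\Sigma_i^{-1}(\mathbf{x}-\boldsymbol{\mu}_i) - \tfrac{1}{2}\ln|\Sigma_i| + \ln P(\omega_i)
$$

Setting $g_1(\mathbf{x}) = g_2(\mathbf{x})$ cancels the equal priors. Multiplying by $-2$ then gives

$$
(\mathbf{x}-\boldsymbol{\mu}_1)^T\Sigma_1^{-1}(\mathbf{x}-\boldsymbol{\mu}_1) - (\mathbf{x}-\boldsymbol{\mu}_2)^T\Sigma_2^{-1}(\mathbf{x}-\boldsymbol{\mu}_2) + \ln\frac{|\Sigma_1|}{|\Sigma_2|} = 0
$$

Consider the case where both covariances are diagonal and have equal variance along $x_2$. The $x_2^2$ terms then cancel, and the equation can typically be solved for $x_2$ as a quadratic in $x_1$.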

c)

Plot the resulting decision boundary (e.g. in Python or MATLAB).
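For c), you can plot the boundary without solving for it by hand. Evaluate $g_1(\mathbf{x}) - g_2(\mathbf{x})$ on a dense grid and draw its zero-level contour. The sketch below assumes Gaussian classes with equal priors and uses hypothetical means and covariances. The function name `gaussian_discriminant` is illustrative.

```python
import numpy as np

# Hypothetical class parameters; replace with the means and covariances from the task.
mu1, Sigma1 = np.array([3.0, 6.0]), np.array([[0.5, 0.0], [0.0, 2.0]])
mu2, Sigma2 = np.array([3.0, -2.0]), np.array([[2.0, 0.0], [0.0, 2.0]])

def gaussian_discriminant(X, mu, Sigma, prior=0.5):
    """g(x) = -1/2 (x-mu)^T Sigma^{-1} (x-mu) - 1/2 ln|Sigma| + ln P, one value per row of X."""
    d = X - mu
    maha = np.einsum("ni,ij,nj->n", d, np.linalg.inv(Sigma), d)  # squared Mahalanobis distance
    _, logdet = np.linalg.slogdet(Sigma)
    return -0.5 * maha - 0.5 * logdet + np.log(prior)

# Dense grid over the feature space, flattened to one feature vector per row.
x1, x2 = np.meshgrid(np.linspace(-10, 10, 401), np.linspace(-10, 10, 401))
X = np.column_stack([x1.ravel(), x2.ravel()])

# D > 0 where class 1 wins, D < 0 where class 2 wins; the boundary is D = 0.
D = (gaussian_discriminant(X, mu1, Sigma1)
     - gaussian_discriminant(X, mu2, Sigma2)).reshape(x1.shape)
```

With matplotlib, `plt.contour(x1, x2, D, levels=[0])` then draws the curve where the two discriminants are equal. You can overlay the analytical expression from b) to check it.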

d)

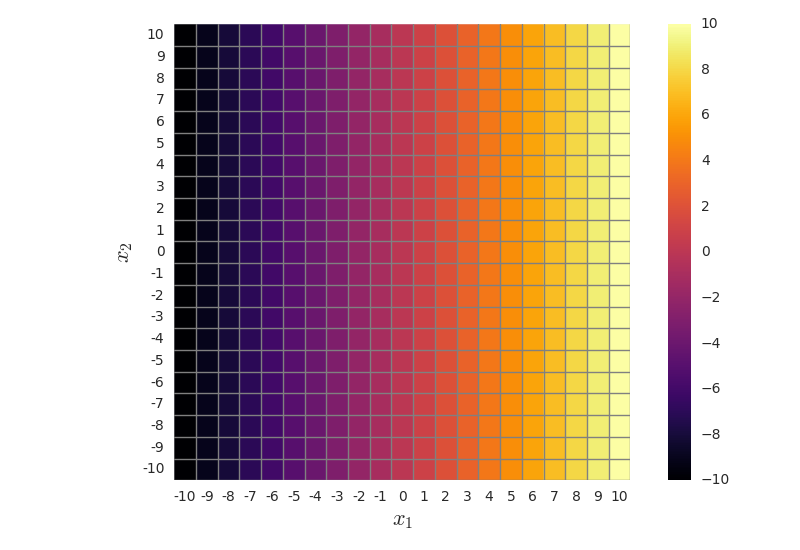

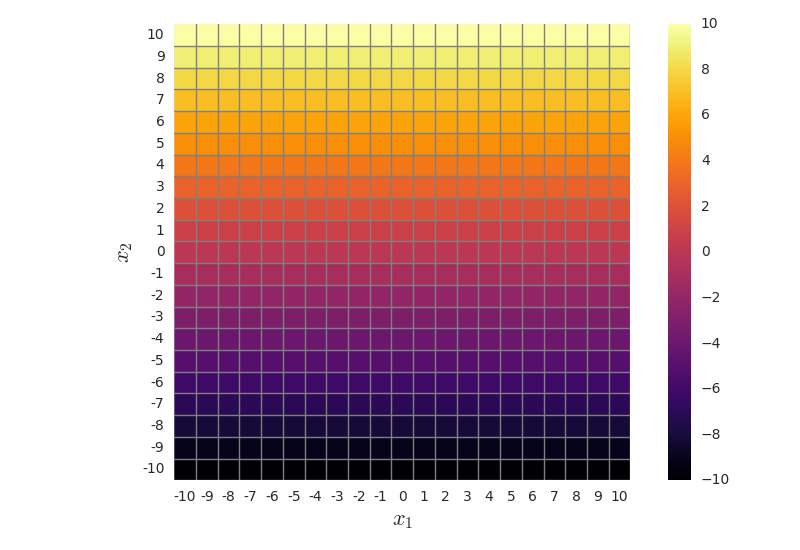

Create a synthetic image with two bands (channels) whose samples span the entire feature space (e.g. from -10 to 10 for both features). For simplicity, let us consider a coarse grid of samples on the integer values $-10, -9, \dots, 10$. Feature 1 should look like a horizontal ramp from -10 to 10 (inclusive), and feature 2 like a vertical ramp from -10 to 10 (inclusive).

This corresponds to creating feature vectors that span the entire feature space (from -10 to 10). If we later classify all these feature vectors, the resulting classification map should have the same decision boundary as the one we derived in b) and plotted in c). This gives us a way to visualize the decision boundary without computing it analytically.
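For d), `np.meshgrid` builds both ramps at once. Here is a minimal sketch. It assumes the bands are stored along the last axis of a $21 \times 21 \times 2$ array, which is a layout choice rather than something the task prescribes.

```python
import numpy as np

# Integer grid from -10 to 10 (inclusive) in both directions: 21 x 21 pixels.
values = np.arange(-10, 11)

# Band 1 varies along the columns (horizontal ramp); band 2 varies along the rows (vertical ramp).
feature1, feature2 = np.meshgrid(values, values)

image = np.stack([feature1, feature2], axis=-1)  # shape (21, 21, 2)
```

`plt.imshow` displays row 0 at the top. Pass `origin="lower"` if you want band 2 to increase upwards.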

e)

Classify the image, and verify that the decision boundary in the resulting classification map has the same shape as the one you obtained in c).
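For e), each pixel of the ramp image is a feature vector. Classifying the image therefore amounts to evaluating both discriminants at every pixel and picking the larger one. This sketch again assumes Gaussian classes, equal priors and hypothetical parameters. Replace them with the task's values, and with the classifier from earlier exercises if it differs.

```python
import numpy as np

# Hypothetical class parameters with equal priors; use the values given in the task.
mu1, Sigma1 = np.array([3.0, 6.0]), np.array([[0.5, 0.0], [0.0, 2.0]])
mu2, Sigma2 = np.array([3.0, -2.0]), np.array([[2.0, 0.0], [0.0, 2.0]])

def discriminant(X, mu, Sigma, prior=0.5):
    """Gaussian discriminant g(x) for each row of X."""
    d = X - mu
    maha = np.einsum("ni,ij,nj->n", d, np.linalg.inv(Sigma), d)
    return -0.5 * maha - 0.5 * np.linalg.slogdet(Sigma)[1] + np.log(prior)

# Two-band ramp image from d): band 0 varies along columns, band 1 along rows.
values = np.arange(-10, 11)
image = np.stack(np.meshgrid(values, values), axis=-1).astype(float)

# One feature vector per pixel; classify and reshape back to the image geometry.
X = image.reshape(-1, 2)
scores = np.column_stack([discriminant(X, mu1, Sigma1), discriminant(X, mu2, Sigma2)])
label_map = (np.argmax(scores, axis=1) + 1).reshape(image.shape[:2])  # labels 1 and 2
```

Display the result with `plt.imshow(label_map, origin="lower", extent=(-10.5, 10.5, -10.5, 10.5))`. This centers each pixel on its integer feature value, so you can compare the class border directly with the curve from c).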

Task 2 - Principal component analysis

In this exercise we will implement and explore linear feature transforms for feature extraction for images. As in the other classification exercises, we will work with a 6-band satellite image from Kjeller (tm1.png, …, tm6.png), with training and test masks (tm_train.png, tm_test.png) found here.

- Load the images.

- Put all the image data into a matrix of shape $(6, MN)$, where $M$ is the height of the images, $N$ is the width, and we have 6 features. That is, one column for each pixel, where each row corresponds to one feature.

- Compute the covariance matrix of this data array.

- Use a built-in routine to compute the eigenvectors and eigenvalues of the covariance matrix.

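- Form a matrix $\mathbf{V}$ whose columns are the eigenvectors $\mathbf{v}_1, \dots, \mathbf{v}_6$ of the covariance matrix.

- Compute the 6 principal components of the data vector, where the $i$th component at position $(m, n)$ can be computed as the inner product between the feature vector $\mathbf{x}(m, n)$ at that position and the $i$th column of $\mathbf{V}$: $y_i(m, n) = \mathbf{v}_i^T \mathbf{x}(m, n)$.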

- Reshape the principal component data vector back to a 2D image geometry, that is, one image with the original height and width for each of the 6 principal components.

- Display the different principal component images. Looking at them, how many do you think are useful for classification?

- Plot the eigenvalues normalized by the sum of all the eigenvalues.
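The steps above can be sketched in NumPy as follows. The sketch makes the following assumptions:

- Each `tm*.png` file is a single-band grayscale image readable by Pillow.
- Eigenvalues are sorted in descending order, so component 1 carries the most variance. `np.linalg.eigh` itself returns them in ascending order.
- As in the task description, the feature vectors are not mean-centered before projection. Centering would only shift each component image by a constant.

The helper names `load_bands` and `principal_components` are hypothetical.

```python
import numpy as np

def load_bands(paths):
    """Load single-band images into a list of 2D float arrays (assumes Pillow is installed)."""
    from PIL import Image  # imported lazily so the PCA code below runs without Pillow
    return [np.asarray(Image.open(p), dtype=float) for p in paths]

def principal_components(bands):
    """PCA of a list of equally sized 2D bands.

    Returns (pc_images, eigvals, V): pc_images has shape (n_bands, M, N),
    eigvals are sorted in descending order, and V holds the matching
    eigenvectors as columns.
    """
    M, N = bands[0].shape
    # One row per feature, one column per pixel: shape (n_bands, M*N).
    X = np.stack([b.reshape(-1) for b in bands]).astype(float)
    C = np.cov(X)                       # rows are variables, columns are observations
    eigvals, V = np.linalg.eigh(C)      # ascending order for symmetric matrices
    order = np.argsort(eigvals)[::-1]   # largest variance first
    eigvals, V = eigvals[order], V[:, order]
    # i-th component at each pixel: inner product of v_i with the feature vector.
    Y = V.T @ X                         # shape (n_bands, M*N)
    return Y.reshape(-1, M, N), eigvals, V
```

Typical usage is `pcs, eigvals, V = principal_components(load_bands([f"tm{i}.png" for i in range(1, 7)]))`. After that, `eigvals / eigvals.sum()` gives the normalized eigenvalues for the last step, and `pcs[i]` is the image for component $i + 1$.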