Object feature extraction - shape descriptors

Image moments

Borrowing nomenclature from probability theory, we can define moments based on the intensity values of an image. Moments are useful for computing properties such as the centroid and area of an object. We will list the general case, where $f(x, y)$ is a discrete image taking grayscale values.

Raw moments

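The raw moments of an image $f(x, y)$ of order $(p + q)$ are defined as

$$M_{pq} = \sum_x \sum_y x^p y^q f(x, y).$$

From this, we can e.g. compute the centroid

$$(\bar{x}, \bar{y}) = \left( \frac{M_{10}}{M_{00}}, \frac{M_{01}}{M_{00}} \right),$$

where, in case of a binary image, $M_{00}$ is the area of the foreground object.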
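As a small illustration of the definition, the raw moments can be computed with NumPy without explicit loops. This is a minimal sketch: the function name `raw_moment` and the tiny test image are made up for the example, and the image is assumed to be indexed as `f[y, x]`.

```python
import numpy as np

def raw_moment(f, p, q):
    """Raw moment M_pq = sum_x sum_y x^p y^q f(x, y) of a 2D array indexed f[y, x]."""
    f = np.asarray(f, dtype=float)
    y, x = np.indices(f.shape)  # y holds row indices, x holds column indices
    return np.sum(x**p * y**q * f)

# Hypothetical binary test image: a block covering columns 2..4 and rows 1..2
f = np.zeros((5, 6))
f[1:3, 2:5] = 1

area = raw_moment(f, 0, 0)       # M00: number of foreground pixels
cx = raw_moment(f, 1, 0) / area  # centroid x = M10 / M00
cy = raw_moment(f, 0, 1) / area  # centroid y = M01 / M00
print(area, cx, cy)
```

For this block of six pixels the centroid lands in the middle of the block, at column 3 and halfway between rows 1 and 2.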

Central moments

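Central moments are moments that are centered around the centroid $(\bar{x}, \bar{y})$, and thus invariant to translation,

$$\mu_{pq} = \sum_x \sum_y (x - \bar{x})^p (y - \bar{y})^q f(x, y).$$

With this, we can compute the covariance matrix

$$\mathrm{cov}[f(x, y)] = \frac{1}{\mu_{00}} \begin{bmatrix} \mu_{20} & \mu_{11} \\ \mu_{11} & \mu_{02} \end{bmatrix},$$

from which we can compute eigenvectors. The angle of the eigenvector corresponding to the largest eigenvalue is the angle of the major axis of the object, which we can define as the orientation of the object.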
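The link between the covariance matrix and the orientation can be sketched as follows. The helper names and the diagonal test object are assumptions made for illustration. The closed-form expression `0.5 * arctan2(2 * mu11, mu20 - mu02)` is the standard shortcut for the angle of the dominant eigenvector of a symmetric 2x2 matrix; note that an axis direction is only defined modulo 180 degrees, so the eigenvector may come out with either sign.

```python
import numpy as np

def central_moment(f, p, q):
    """Central moment mu_pq of a 2D array indexed f[y, x]."""
    f = np.asarray(f, dtype=float)
    y, x = np.indices(f.shape)
    m00 = f.sum()
    xbar = (x * f).sum() / m00
    ybar = (y * f).sum() / m00
    return np.sum((x - xbar)**p * (y - ybar)**q * f)

def orientation(f):
    """Return the major-axis angle from the eigenvector and from the closed form."""
    mu00 = central_moment(f, 0, 0)
    mu11 = central_moment(f, 1, 1)
    cov = np.array([[central_moment(f, 2, 0), mu11],
                    [mu11, central_moment(f, 0, 2)]]) / mu00
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
    vx, vy = eigvecs[:, -1]                 # eigenvector of the largest eigenvalue
    angle_eig = np.arctan2(vy, vx)
    angle_formula = 0.5 * np.arctan2(2 * cov[0, 1], cov[0, 0] - cov[1, 1])
    return angle_eig, angle_formula

# Hypothetical test object: a one-pixel-wide line along y = x
f = np.zeros((20, 20))
for i in range(20):
    f[i, i] = 1

angle_eig, angle_formula = orientation(f)
# Compare modulo pi, since v and -v describe the same axis
print(np.degrees(np.mod(angle_eig, np.pi)), np.degrees(angle_formula))
```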

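We will here derive some of the lower central moments in terms of the raw moments $M_{pq}$ and the centroid $(\bar{x}, \bar{y}) = (M_{10}/M_{00}, M_{01}/M_{00})$.

For the zeroth order, the weights $(x - \bar{x})^0 (y - \bar{y})^0$ are all one, so

$$\mu_{00} = \sum_x \sum_y f(x, y) = M_{00}.$$

For the first order central moments,

$$\mu_{10} = \sum_x \sum_y (x - \bar{x}) f(x, y) = M_{10} - \bar{x} M_{00} = 0,$$

and likewise, $\mu_{01} = 0$.

And, finally, for the second order moments,

$$\mu_{11} = \sum_x \sum_y (x - \bar{x})(y - \bar{y}) f(x, y) = M_{11} - \bar{x} M_{01} - \bar{y} M_{10} + \bar{x} \bar{y} M_{00} = M_{11} - \bar{x} M_{01},$$

$$\mu_{20} = \sum_x \sum_y (x - \bar{x})^2 f(x, y) = M_{20} - 2 \bar{x} M_{10} + \bar{x}^2 M_{00} = M_{20} - \bar{x} M_{10},$$

and in a similar manner, $\mu_{02} = M_{02} - \bar{y} M_{01}$.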
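The low-order identities, such as $\mu_{20} = M_{20} - \bar{x} M_{10}$ with $M_{pq}$ denoting raw moments, are easy to check numerically. The sketch below compares the direct definition of each central moment with its raw-moment shortcut on a random grayscale image; the image and the helper names `M` and `mu` are made up for the check.

```python
import numpy as np

rng = np.random.default_rng(0)
f = rng.integers(0, 256, size=(8, 10)).astype(float)  # hypothetical grayscale image
y, x = np.indices(f.shape)

def M(p, q):
    """Raw moment of f."""
    return np.sum(x**p * y**q * f)

xbar, ybar = M(1, 0) / M(0, 0), M(0, 1) / M(0, 0)

def mu(p, q):
    """Central moment of f, computed directly from the definition."""
    return np.sum((x - xbar)**p * (y - ybar)**q * f)

# Pairs of (direct definition, shortcut via raw moments)
checks = {
    "mu00": (mu(0, 0), M(0, 0)),
    "mu10": (mu(1, 0), 0.0),
    "mu01": (mu(0, 1), 0.0),
    "mu11": (mu(1, 1), M(1, 1) - xbar * M(0, 1)),
    "mu20": (mu(2, 0), M(2, 0) - xbar * M(1, 0)),
    "mu02": (mu(0, 2), M(0, 2) - ybar * M(0, 1)),
}
for name, (direct, shortcut) in checks.items():
    print(name, np.isclose(direct, shortcut, atol=1e-6))
```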

Normalized central moments

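In addition to translation invariance, we can obtain scale invariance with normalized central moments

$$\eta_{pq} = \frac{\mu_{pq}}{\mu_{00}^{1 + (p + q)/2}},$$

where $\mu_{pq}$ are the central moments.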
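To see the scale invariance in practice, one can compare a normalized central moment of an object with that of a magnified copy. The following is a rough sketch with a made-up rectangle and a hypothetical helper `normalized_central_moment`. On a pixel grid the invariance is only approximate, so the two values agree closely but not exactly.

```python
import numpy as np

def normalized_central_moment(f, p, q):
    """eta_pq = mu_pq / mu_00^(1 + (p + q)/2) for a 2D array indexed f[y, x]."""
    f = np.asarray(f, dtype=float)
    y, x = np.indices(f.shape)
    mu00 = f.sum()
    xbar, ybar = (x * f).sum() / mu00, (y * f).sum() / mu00
    mu_pq = np.sum((x - xbar)**p * (y - ybar)**q * f)
    return mu_pq / mu00**(1 + (p + q) / 2)

# Hypothetical object: a rectangle 20 pixels wide and 10 pixels high
small = np.zeros((30, 40))
small[5:15, 10:30] = 1

# The same object magnified by a factor of 3 in both directions
large = np.kron(small, np.ones((3, 3)))

eta20_small = normalized_central_moment(small, 2, 0)
eta20_large = normalized_central_moment(large, 2, 0)
print(eta20_small, eta20_large)
```

Both values are close to 1/6, which is what a continuous rectangle with a 2:1 aspect ratio gives; the small remaining difference comes from the discretization and shrinks as the object grows.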

Hu moments

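Hu moments are invariant to translation, scale, and rotation, and are stated below for completeness in terms of the normalized central moments $\eta_{pq}$.

$$\begin{aligned}
I_1 &= \eta_{20} + \eta_{02} \\
I_2 &= (\eta_{20} - \eta_{02})^2 + 4 \eta_{11}^2 \\
I_3 &= (\eta_{30} - 3 \eta_{12})^2 + (3 \eta_{21} - \eta_{03})^2 \\
I_4 &= (\eta_{30} + \eta_{12})^2 + (\eta_{21} + \eta_{03})^2 \\
I_5 &= (\eta_{30} - 3 \eta_{12})(\eta_{30} + \eta_{12}) \left[ (\eta_{30} + \eta_{12})^2 - 3 (\eta_{21} + \eta_{03})^2 \right] \\
    &\quad + (3 \eta_{21} - \eta_{03})(\eta_{21} + \eta_{03}) \left[ 3 (\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2 \right] \\
I_6 &= (\eta_{20} - \eta_{02}) \left[ (\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2 \right] + 4 \eta_{11} (\eta_{30} + \eta_{12})(\eta_{21} + \eta_{03}) \\
I_7 &= (3 \eta_{21} - \eta_{03})(\eta_{30} + \eta_{12}) \left[ (\eta_{30} + \eta_{12})^2 - 3 (\eta_{21} + \eta_{03})^2 \right] \\
    &\quad - (\eta_{30} - 3 \eta_{12})(\eta_{21} + \eta_{03}) \left[ 3 (\eta_{30} + \eta_{12})^2 - (\eta_{21} + \eta_{03})^2 \right]
\end{aligned}$$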
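As a quick sanity check of the rotation invariance, the sketch below computes the first two Hu invariants, $\eta_{20} + \eta_{02}$ and $(\eta_{20} - \eta_{02})^2 + 4\eta_{11}^2$ (with $\eta_{pq}$ the normalized central moments), for a made-up L-shaped object and for the same object rotated by 90 degrees. The helper names are hypothetical. A 90 degree rotation is used because it maps the pixel grid onto itself, so the two results should agree up to floating point error; for other angles, resampling makes the invariance approximate.

```python
import numpy as np

def eta(f, p, q):
    """Normalized central moment eta_pq of a 2D array indexed f[y, x]."""
    f = np.asarray(f, dtype=float)
    y, x = np.indices(f.shape)
    mu00 = f.sum()
    xbar, ybar = (x * f).sum() / mu00, (y * f).sum() / mu00
    mu_pq = np.sum((x - xbar)**p * (y - ybar)**q * f)
    return mu_pq / mu00**(1 + (p + q) / 2)

def hu_first_two(f):
    """First two Hu invariants, built from the second-order moments only."""
    e20, e02, e11 = eta(f, 2, 0), eta(f, 0, 2), eta(f, 1, 1)
    return e20 + e02, (e20 - e02)**2 + 4 * e11**2

# Hypothetical L-shaped object and its rotation by 90 degrees
f = np.zeros((12, 12))
f[2:10, 3:5] = 1
f[8:10, 3:9] = 1
g = np.rot90(f)

hu_f = hu_first_two(f)
hu_g = hu_first_two(g)
print(hu_f, hu_g)
```

OpenCV also provides `cv2.HuMoments`, which takes the output of `cv2.moments` and returns all seven invariants.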

Example

In this example, we will find the centroid and the direction of a swarm of birds, or at least of the birds in the image bird_swarm.jpg, which can be found here.

We first read in the image, and threshold it to get the birds as foreground.

    import numpy as np
    import matplotlib.pyplot as plt
    import cv2

    image_file = '../../bird_swarm.jpg' # Change this to your location
    img = cv2.imread(image_file, cv2.IMREAD_COLOR)
    gl_img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    thresh_img = gl_img < 50

    plt.imshow(thresh_img, cmap='gray')
    plt.xticks([]), plt.yticks([])
    plt.show()

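Then, we compute the moments, and note that $f(x, y) = 0$ for background and $f(x, y) = 1$ for foreground. Notice also that we use ($x$, $y$) as in a regular Cartesian coordinate convention, with $x$ along the horizontal axis and $y$ along the vertical axis, so a pixel is indexed as thresh_img[y, x].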

    m00 = m01 = m10 = m11 = m20 = m02 = m21 = m12 = 0
    height, width = thresh_img.shape
    for y in range(height):
        for x in range(width):
            m00 += thresh_img[y, x]
            m10 += x*thresh_img[y, x]
            m01 += y*thresh_img[y, x]
            m11 += x*y*thresh_img[y, x]
            m20 += x*x*thresh_img[y, x]
            m02 += y*y*thresh_img[y, x]
            m21 += x*x*y*thresh_img[y, x]
            m12 += x*y*y*thresh_img[y, x]

Next, we compute the centroid

cx = m10 / m00

cy = m01 / m00

print('Centriod: ({0:.2f}, {1:.2f})'.format(cx, cy))

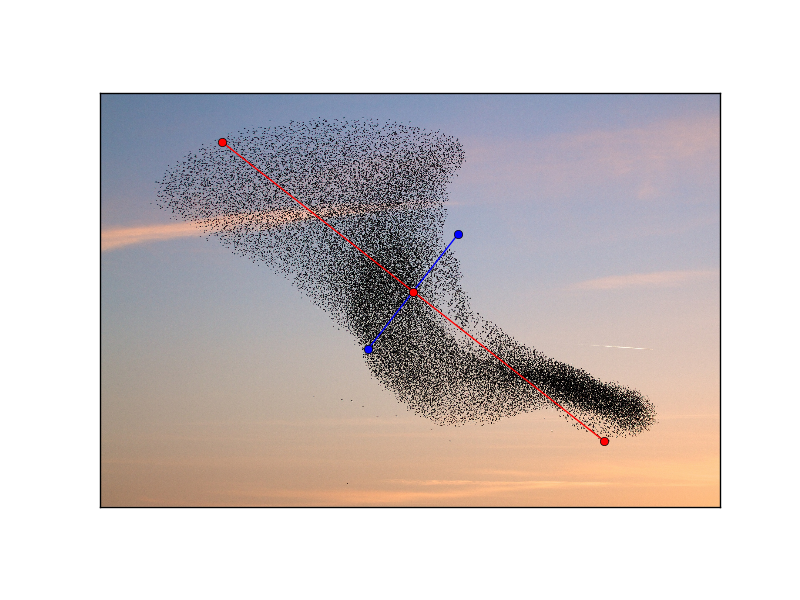

which outputs Centriod: (1034.36, 654.48). Using the central moments above,

we can also compute the angle of the major axis of the swarm, relative to the

horizontal coordinate axis. Note that we use np.arctan2(...) to make our life

easier, quadrant-wise.

    # Central moments expressed through the raw moments and the centroid
    mu00 = m00
    mu11 = m11 - cx*m01
    mu20 = m20 - cx*m10
    mu02 = m02 - cy*m01

    # Angle of the major axis; arctan2 takes care of the quadrant
    theta = 1/2*np.arctan2(2*mu11/mu00, (mu20 - mu02)/mu00)
    print('Angle: {0:.2f}'.format(theta*180/np.pi))

which prints Angle: 38.05. We now plot the major and minor axes (with arbitrary length) on top of the original image to illustrate the location of the centroid and the angle.

    rho = 800
    dx_major = rho*np.cos(theta)
    dy_major = rho*np.sin(theta)
    dx_minor = 0.3*rho*np.cos(theta - np.pi/2)
    dy_minor = 0.3*rho*np.sin(theta - np.pi/2)

    plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
    plt.plot([cx-dx_minor, cx, cx+dx_minor], [cy-dy_minor, cy, cy+dy_minor], 'bo-')
    plt.plot([cx-dx_major, cx, cx+dx_major], [cy-dy_major, cy, cy+dy_major], 'ro-')
    plt.xticks([]), plt.yticks([])

    # Remove redundant space left by the plt.plot() commands
    axes = plt.gca()
    axes.set_xlim([0, width])
    axes.set_ylim([height, 0])
    plt.show()

Region properties

Based on the contour of an object, we will here show how to estimate the area and perimeter of this object. For reference, see e.g. Yang, Albregtsen, Lønnestad, and Grøttum (1994), "Methods to estimate areas and perimeters of blob-like objects: a comparison".

Properties from chain code

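For 8-connected chain code, we can approximate the area and perimeter using the following formulas.

Here, $n$ is the length of the chain code, and $c_{ix}$ and $c_{iy}$ indicate the change in direction of $x$ and $y$, respectively, from chain code element $c_i$. E.g. for $c_i = 0$, $c_{ix} = 1$ and $c_{iy} = 0$ (assuming that direction 0 points along the positive $x$ axis). $n_e$ and $n_o$ are the number of even and odd chain code elements, and $n_c$ is the number of corners in the chain code. $y_{i-1}$ is the $y$ position at element $c_{i-1}$ (this can be initialized to an arbitrary value as the chain code should be translation invariant).

Freeman

$$A_F = \sum_{i=1}^{n} c_{ix} \left( y_{i-1} + \frac{c_{iy}}{2} \right), \qquad P_F = n_e + \sqrt{2}\, n_o$$

Vossepoel and Smeulders

$$P_{VS} = 0.980\, n_e + 1.406\, n_o - 0.091\, n_c$$

Kulpa

$$P_K = \frac{\pi}{8} (1 + \sqrt{2}) (n_e + \sqrt{2}\, n_o)$$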
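The chain code estimators can be sketched in a few lines of Python. Several things here are assumptions rather than something the text fixes: the direction numbering (0 along the positive x axis, then counterclockwise in 45 degree steps), the definition of a corner as any change of direction between consecutive elements (counted cyclically), and the estimator formulas themselves, which are written as they are usually quoted from the comparison paper cited above. The function name and the test chain code are made up.

```python
import math

# Assumed Freeman numbering: 0 points along +x, then counterclockwise in 45 degree steps
DX = [1, 1, 0, -1, -1, -1, 0, 1]
DY = [0, 1, 1, 1, 0, -1, -1, -1]

def chain_code_properties(code, y0=0):
    """Area and perimeter estimates from a closed 8-connected chain code."""
    n_even = sum(1 for c in code if c % 2 == 0)
    n_odd = len(code) - n_even
    # Assumption: a corner is a change of direction between neighbouring elements;
    # code[i - 1] wraps around for i = 0, so the count is cyclic
    n_corners = sum(1 for i in range(len(code)) if code[i] != code[i - 1])

    area, y = 0.0, y0
    for c in code:
        area += DX[c] * (y + DY[c] / 2)  # Freeman's area sum
        y += DY[c]

    base = n_even + math.sqrt(2) * n_odd
    return {
        "area_freeman": abs(area),  # the sign only encodes the traversal direction
        "perimeter_freeman": base,
        "perimeter_vossepoel_smeulders": 0.980 * n_even + 1.406 * n_odd - 0.091 * n_corners,
        "perimeter_kulpa": math.pi / 8 * (1 + math.sqrt(2)) * base,
    }

# Hypothetical contour: a square with side length 3, traversed counterclockwise
square = [0, 0, 0, 2, 2, 2, 4, 4, 4, 6, 6, 6]
props = chain_code_properties(square)
print(props)
```

Calling the function with a different `y0` gives the same area, which illustrates the translation invariance mentioned above.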

Properties from bit quads pattern matching

We can also estimate the area and perimeter of an object by matching local windows in an image against a set of patterns that we will define below. For a given list of patterns and a local window in the image, we check whether the window matches any of the patterns in the list. We do this for all local windows in the image, and accumulate the number of matches in a count variable $n$. Explicitly, if our pattern has $h \times w$ elements, we select an $h \times w$ window, beginning in the top-left corner of the image; the next window will be one pixel to the right, and we continue in this sliding fashion until the whole image is covered. Note that it is important that the region you are examining is filled.

Patterns

The following patterns (or lists of patterns) are defined:

Single

Horizontal

Vertical

Quadratic 0

Quadratic 1

Quadratic 2

Quadratic 3

Quadratic 4

Quadratic diagonal

Property estimates

Referring to the patterns above, we can estimate the area and perimeter in a number of ways.
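As one concrete instance of this kind of estimate, the sketch below counts the 2x2 "bit quad" patterns and applies the formulas usually attributed to Gray. Both the pattern definitions (Q0 to Q4 have zero to four foreground pixels, with two adjacent pixels for Q2, and QD has two diagonally opposite foreground pixels) and the formulas are assumptions about what is intended here, taken from the standard bit quad literature. The function names and the test image are made up.

```python
import numpy as np

def bit_quad_counts(img):
    """Count 2x2 bit quad patterns in a binary image, sliding one pixel at a time."""
    b = np.pad(np.asarray(img, dtype=int), 1)  # zero border so edge pixels are covered
    tl, tr = b[:-1, :-1], b[:-1, 1:]           # top-left and top-right of each window
    bl, br = b[1:, :-1], b[1:, 1:]             # bottom-left and bottom-right
    s = tl + tr + bl + br                      # number of foreground pixels per window
    diagonal = (s == 2) & (tl == br)           # two foreground pixels on a diagonal
    return {
        "Q0": int(np.sum(s == 0)),
        "Q1": int(np.sum(s == 1)),
        "Q2": int(np.sum((s == 2) & ~diagonal)),
        "Q3": int(np.sum(s == 3)),
        "Q4": int(np.sum(s == 4)),
        "QD": int(np.sum(diagonal)),
    }

def gray_estimates(n):
    """Area and perimeter from bit quad counts (formulas attributed to Gray)."""
    area = (n["Q1"] + 2 * n["Q2"] + 3 * n["Q3"] + 4 * n["Q4"] + 2 * n["QD"]) / 4
    perimeter = n["Q1"] + n["Q2"] + n["Q3"] + 2 * n["QD"]
    return area, perimeter

# Hypothetical filled object: a 3x3 square
img = np.zeros((5, 5), dtype=int)
img[1:4, 1:4] = 1

counts = bit_quad_counts(img)
area, perimeter = gray_estimates(counts)
print(counts, area, perimeter)
```

With these formulas the area equals the plain pixel count, because every foreground pixel is seen by exactly four windows; the perimeter estimate, in contrast, depends on how the boundary is weighted, and other authors use different weights.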