Classification I

Classification of images can mean a lot of things, usually it is used in the context of classifying images based on the things in the image, e.g. “this is an image of a dog”, or “this is an image of a cat”.

This note is related to the exercise for this week, where the task is to partition an image according to different classes, also called image segmentation. More formally, the task is to assign a class to every pixel in an image , where is set of classes in which it can belong.

In this note we will cover a univariate Gaussian classifier, utilizing one feature at a time, while multivariate classifiers will be covered later.

Univariate Gaussian classifier

Since this is a univariate classifier, every pixel will be associated with one observable or computable feature, . We will use this feature to determine in which class belongs, or equivivalently, in which class the feature will belong. Let denote the set of features associated with the image such that each will have a unique feature . Note that even though each pixel has an assosiated feature, the value of the feature can be shared by multiple pixels.

Probability model

For each pixel we will model its associated feature as a continuous random variable which range is in . Likewise, we will model the class as a discrete random variable which range is in . For we will have an a priori probability density (or simply prior) which is the probability of labeling with before any features are observed. Likewise, we have the probability density of , , and by total probability, this can be written as

Here, is the joint probability of taking the value and taking the value .

We now define the likelihood which is the probability of taking the value of if it indeed belongs to class . With this, we can now define the a posteriori (or posterior) probability density

Note that the denominator is not dependent on the particular class , and we can view this as simply a normalization factor. This is very convenient, as the true density of can be quite cumbersome to find (or at least express analytically and concise).

This posterior distribution is what we are after. It describes the probability of taking the value given that we have observed . In our case; the probability that pixel , with a corresponding feature , belongs to class .

Discrimination function

In this classification, we will label the pixel with the class which produces the largest posterior probability given the observations . Formally, we define a discrimination function to be the mapping from an observed feature to a class ,

where some equivalent and convenient representation was also included.

Gaussian distribution model

Up until now, we have not said anything about which distributions the random variables belong to, so we will specify them here. We assume that is uniformly distributed over ,

where is the number of classes in . The conditional likelihood will be a univariate Gaussian

where is the feature mean of class and is the feature variance of class . Important: and are the variables that are to be determined when training the classifier.

Note that the determination function is not dependent on the marginal density of , so, as mentioned above, we will not specify it further. Since we have now described our distributions, we can revisit the discrimination function

where the last representation is what is actually implemented.

Training

In order to determine and , we need to have a training set for each class label

This can e.g. be obtained by masking out the pixels of a feature image belonging to the class , for every class in .

We then estimate the class mean as

and the class variance estimate as

where denotes the number of elements in the set. We use hat notation to emphasize that we are computing estimates of the actual class mean and variance.

Dataset partition

When a true classification is available, we can evaluate our proposed classifier against this reference classification, this is called supervised evaluation. On the same note, our classifier is a supervised classifier, since we are training it using a training set of labeled pixels to estimate the parameters in the classifier.

The dataset of labeled pixels is normally partitioned into a training set, a validation set, and a test set. The training set is used to train our classifier. The validation set is used to finetune or adjust hyperparameters, parameters that are a part of the classifier, but not trainable variables. The test set is used to evaluate the classifier.

Normally, the training set is the largest partition. The reason is simply that our classifier usually performs better when trained on a larger training set (up to some reasonable quantity). A classifier should be able to “learn what it is thaught”, meaning that it should perform good on cases it is trained on. But parhaps more importantly, it should generalize well, after all, we want to train a classifier so that we can use it on new examples. Training on a small training set often leaves our classifier overtrained, meaning that it perform well on data from the training set, but poorly on new data. And this is the point of a large training set, with it, we hope that the classifier sees enough examples from a large spectrum, such that it performs well on new data. Note that the data in the training set needs to span a wide variety, a large training set size is of no use if all examples in the training set are identical.

Classification

The procedure for classifying an image is then to classify each pixel by evaluating the function for the feature assosiated with the pixel , and using the mean and covariance estimators obtained from the training.

More verbose, for each , find the associated feature (e.g. obtained from a feature image ). Then compute

for all classes , and label with the class

Evaluation

After you have trained your classifier, that is, computed estimates and , you have your proposed classifier which you can use to classify (in our case) pixels in an image. One can imagine that we are trying out different classifiers, parhaps based on different features or training data, and in this case it is important to have a tool to quantify the success of the different classifiers.

As mentioned above, we evaluate our classifier on the test set partition of our labeled data. It is very important that the training set and test set are independent such that we actually test how the classifier work on new data, as a overtrained classifier would achieve good results on a test set resembling the training set, which is of little practical utility.

In this case it is common to compute a so called confusion matrix such that element is the number of pixels labeled by the reference and labeled by our proposed classifier. Correctly classified pixels will be accumulated on the diagonal, and wrongly labeled off the diagonal.

Basic quantities

From this confusion matrix, we can compute a number of different evaluation metrics, but first, we must define some basic quantities. Let be the total number of pixels in our test set. Let and denote the total number of pixels in the test set labeled with class by the reference classifier, and the proposed classifier, respectively. Conversly, let and be the number of pixels not labeled by the reference and the proposal, respectively.

True positive

For class , we let denote the number of true positive pixels, that is, pixels that is labeled by both the reference and proposal classifier.

False positive

The number of false positive pixels for class is denoted , and it is the number of pixels labeled by the proposal, but not by the reference (here, we do not discriminate between different missclassifications).

where the sum is over all classes except for the class .

False negative

The number of false negative pixels for class is denoted , and is the number of pixels labeled by the reference but not by the proposal classifier.

True negative

The number of true negative pixels for class is denoted . and is the number of pixels not labeled by both the reference and the proposal classifier.

Convenience relations

From the basic quantites above, we can list some sanity checks:

Probabilistic interpretation

If we normalize the confusion matrix, it can be interpreted like an estimate for the joint probability mass function between the reference and proposed classification. Let and be discrete random variables taking values in , and let them model the label given by the reference, and proposal, respectively. And with some abuse of notation, let denote some probability measure, then the following interpretation can be made of the relative quantities defined in the table.

| Quantity | Probabilistic interpretation |

|---|---|

Compound evaluation metrics

Here, we will list some common evaluation metrics used to evaluate classification performance. What metric (or ensamble of different metrics) to use really depend on the application of your classifier, but some general words can be said of each of them.

True positive rate (sensitivity)

Expression:

Probabilistic interpretation:

This measures the fraction of the reference positive you where able to correctly classify. This does not take into account wrong classifications, and a classifier classifying everything to class would achieve perfect sensitivity.

True negative rate (specificity)

Expression:

Probabilistic interpretation:

This measures how well you hit the target, caring only of the missclassified region. A consequence of this is that if your classifier did not classify anything with the label , this classifier would achieve perfect specificity. Also, it is not invariant to prevalence, meaning that increasing the sample size would improve the result.

Positive predictive value (precision)

Expression:

Probabilistic interpretation:

This measures the what proportion of the region the proposed classifier labeles with a class label actually belongs to the class. Or what is the probability of a positive labeled outcome actually is positive. This metric is not independent of prevalence, and increasing the number of reference positive would likely increase the precision (all else equal).

Negative predictive value

Expression:

Probabilistic interpretation:

Conversly, this measures the probability of a proposed negative result actually being negative. But as the above metrics, this one is not independent of prevalence, and you could get a better performance by increasing the sample size.

Rand accuracy

Expression:

Probabilistic interpretation:

A often used metric that takes into account both true positives and true negatives. This is also not independent of prevalence, and adds little information in terms of evaluating a classifier. Classifying rare instances would automatically achieve high accuracy, a property which is somewhat undesirable for a classifier evaluator.

Jaccard index (intersection over union)

Expression:

Probabilistic interpretation:

A classifier often used in evaluation of segmentation methods. This is invariant to sample size, but does not take into account the correctly negative labeled outcomes. Depending on the application, this is not as hopeless as the metrics discussed above.

Dice similarity coefficient

Expression:

Probabilistic interpretation:

Essentially the same as the Jaccard index, but measures the fraction “number of true positive” over the “mean number classified by the reference and proposal” in stead of over the “number classified by the reference and proposal in union”.

Area under ROC

Expression:

Probabilistic interpretation:

This measures the area under the receiver operating characteristic curve, and as can be seen from the expression, is an average between the sensitivity and the specificity. Hence, it inherits the properties of those metrics, which was discussed above. Contrary to the metrics above, a value of 0.5 represent the result of a classifier classifying at random, and thus completely useless.

Development plot

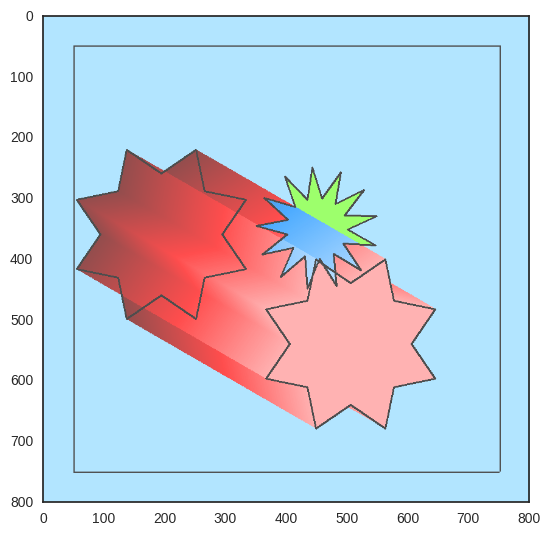

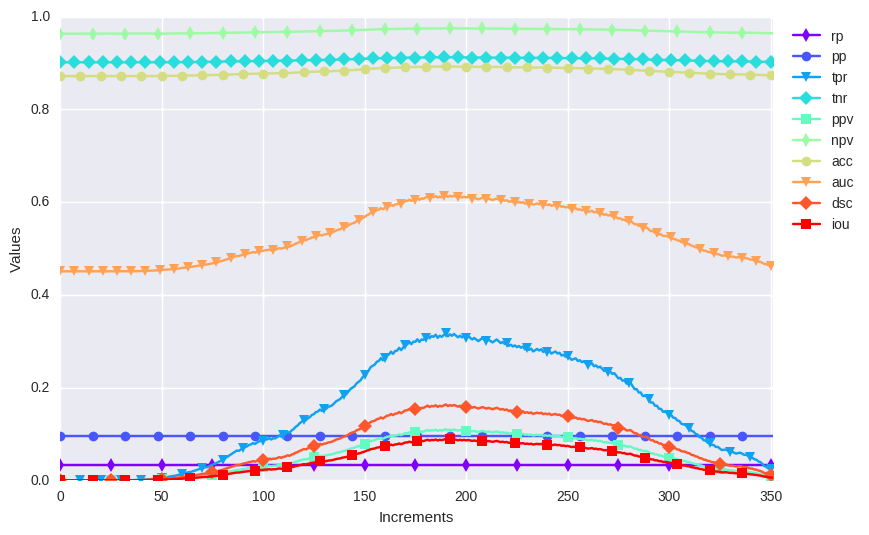

Here is a toy example to illustrate how the different metrics discussed above changes when a proposal star object is “passing through” a reference star object (from dark to bright colors).