Solution proposal - exercise 9

Task 1

a)

The eigenvalues $\lambda_1$, $\lambda_2$ of the covariance matrices $\Sigma_i$ can be computed from

$$|\Sigma_i - \lambda I| = 0$$

for $i = 1, 2$.

The left-hand side for class 1 is

therefore we get , and as eigenvalues for class 1.

For class 2 we compute the eigenvalues the same way and end up with a single, repeated eigenvalue, $\lambda_1 = \lambda_2$.
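As a check on the hand computation: for a 2×2 matrix the characteristic equation reduces to $\lambda^2 - \operatorname{tr}(\Sigma)\lambda + |\Sigma| = 0$, which the quadratic formula solves directly. Below is a minimal NumPy sketch of that step; the function name and the two example matrices are hypothetical placeholders, not the covariance matrices from the exercise.

```python
import numpy as np

def eigenvalues_2x2(cov):
    """Roots of the characteristic equation |cov - lam*I| = 0 for a 2x2 matrix,
    i.e. lam^2 - tr(cov) lam + det(cov) = 0. Returns (largest, smallest)."""
    cov = np.asarray(cov, dtype=float)
    tr = cov[0, 0] + cov[1, 1]
    det = cov[0, 0] * cov[1, 1] - cov[0, 1] * cov[1, 0]
    # For a symmetric matrix the discriminant equals (a - d)^2 + 4 b^2 >= 0;
    # clipping at zero only guards against rounding errors.
    root = np.sqrt(max(tr * tr - 4.0 * det, 0.0))
    return float((tr + root) / 2.0), float((tr - root) / 2.0)

# Hypothetical matrices, NOT the covariance matrices from the exercise.
sigma_a = np.array([[2.0, 1.0], [1.0, 2.0]])   # correlated features
sigma_b = np.array([[1.5, 0.0], [0.0, 1.5]])   # multiple of the identity

print(eigenvalues_2x2(sigma_a))  # (3.0, 1.0)
print(eigenvalues_2x2(sigma_b))  # (1.5, 1.5): a single repeated eigenvalue
```

The second example mirrors the situation for class 2: a diagonal matrix with equal diagonal entries has one repeated eigenvalue.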

The corresponding eigenvectors are found by solving the system

$$(\Sigma_i - \lambda_j I)\,\mathbf{e}_j = \mathbf{0}.$$

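For class 1, this gives the unit eigenvectors $\mathbf{e}_1$ and $\mathbf{e}_2$, corresponding to the eigenvalues $\lambda_1$ and $\lambda_2$, respectively. Any nonzero vector proportional to these is also a valid eigenvector, but it is convenient to show the ones with unit length. For class 2 the covariance matrix is diagonal with a single repeated eigenvalue, i.e. a multiple of the identity matrix, so every nonzero vector is an eigenvector; we can therefore choose the same eigenvectors $\mathbf{e}_1$ and $\mathbf{e}_2$ as for class 1.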
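The eigenvector step can be sketched the same way. For a symmetric 2×2 matrix with off-diagonal entry $b \neq 0$, the first row of $(\Sigma - \lambda I)\mathbf{e} = \mathbf{0}$ reads $(a - \lambda)x + by = 0$, which is solved by $(x, y) = (b, \lambda - a)$; scaling to unit length gives the vectors shown in the text. This is a hypothetical helper under those assumptions, with an example matrix that is not the exercise's.

```python
import numpy as np

def unit_eigenvectors_2x2(cov, tol=1e-12):
    """Eigenvalues (largest first) and unit eigenvectors (as columns) of a
    symmetric 2x2 matrix, found by solving (cov - lam*I) e = 0 for each lam."""
    cov = np.asarray(cov, dtype=float)
    a, b, d = cov[0, 0], cov[0, 1], cov[1, 1]
    lams = np.linalg.eigvalsh(cov)[::-1]  # eigenvalues, largest first
    if abs(b) <= tol:
        # Diagonal matrix: the coordinate axes are eigenvectors. If a == d the
        # matrix is a multiple of I and any nonzero vector works; the axes are a choice.
        vecs = np.eye(2) if a >= d else np.eye(2)[:, ::-1]
        return lams, vecs
    cols = []
    for lam in lams:
        # First row of the system: (a - lam) x + b y = 0, solved by (x, y) = (b, lam - a).
        e = np.array([b, lam - a])
        cols.append(e / np.linalg.norm(e))  # scale to unit length
    return lams, np.column_stack(cols)

# Hypothetical example matrix, NOT the exercise's class-1 covariance.
sigma = np.array([[2.0, 1.0], [1.0, 2.0]])
lams, vecs = unit_eigenvectors_2x2(sigma)
# Eigenvalues 3 and 1, with unit eigenvectors (1, 1)/sqrt(2) and (1, -1)/sqrt(2).
```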

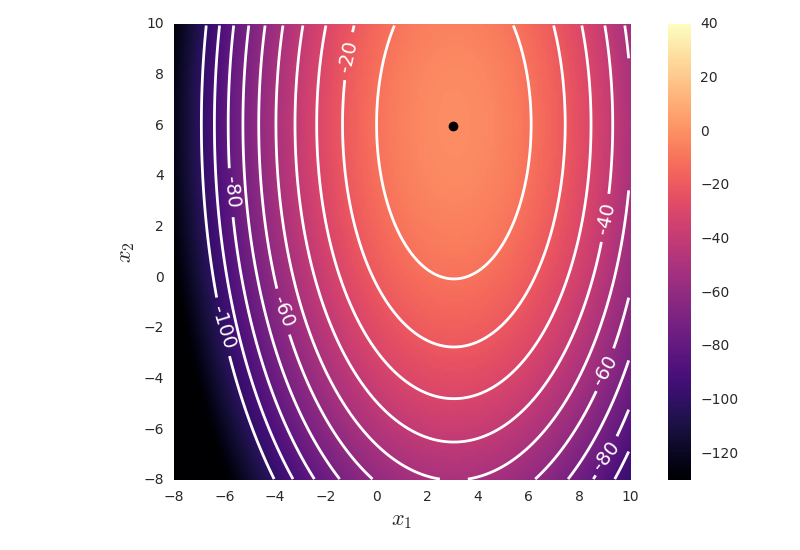

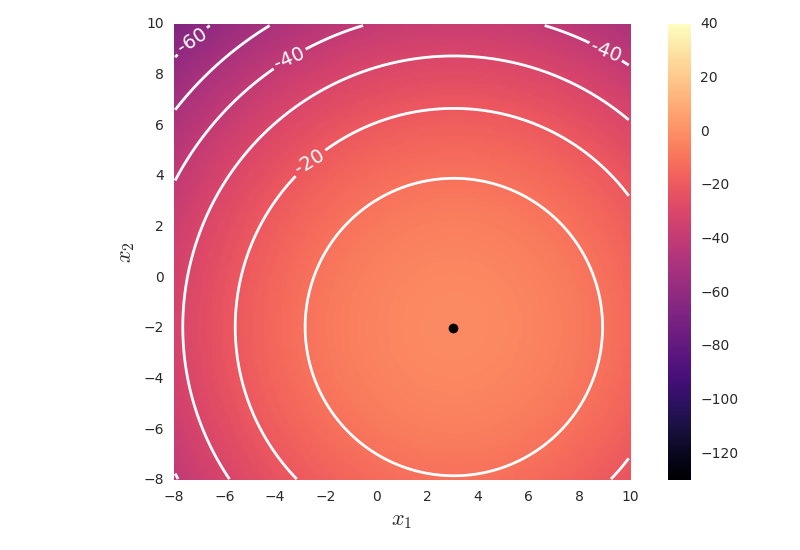

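From the eigenvalues and eigenvectors we can draw the contours of the covariance matrices. Each contour is an ellipse whose axes point along the eigenvectors, with semi-axis lengths proportional to the square roots of the corresponding eigenvalues; for class 2, whose two eigenvalues are equal, the contours are circles.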
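To make the link between the eigendecomposition and the drawn contours concrete: the points with $(\mathbf{x}-\boldsymbol{\mu})^T\Sigma^{-1}(\mathbf{x}-\boldsymbol{\mu}) = c^2$ are obtained by stretching a unit circle by $c\sqrt{\lambda_j}$ along each axis and rotating it into the eigenvector basis. A minimal sketch; the helper name and the example mean and covariance are hypothetical.

```python
import numpy as np

def covariance_ellipse(mu, cov, c=1.0, n=200):
    """Points x on the contour (x - mu)^T cov^{-1} (x - mu) = c^2, shape (n, 2)."""
    mu = np.asarray(mu, dtype=float)
    lams, vecs = np.linalg.eigh(np.asarray(cov, dtype=float))
    t = np.linspace(0.0, 2.0 * np.pi, n)
    circle = np.vstack([np.cos(t), np.sin(t)])          # unit circle, shape (2, n)
    # Scale each axis by c*sqrt(eigenvalue), then rotate into the eigenvector basis.
    pts = vecs @ (c * np.sqrt(lams)[:, None] * circle)
    return (mu[:, None] + pts).T

# Hypothetical class parameters, NOT the exercise's values.
mu1, cov1 = [3.0, 3.0], [[2.0, 1.0], [1.0, 2.0]]
contour = covariance_ellipse(mu1, cov1, c=2.0)
```

With matplotlib, `plt.plot(contour[:, 0], contour[:, 1])` would draw one such ellipse; calling the helper for several values of `c` gives nested contours.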

b)

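If we take the log posterior as the discriminant function (assuming equal prior probabilities and removing the class-independent constants),

$$g_i(\mathbf{x}) = -\frac{1}{2}(\mathbf{x}-\boldsymbol{\mu}_i)^T \Sigma_i^{-1} (\mathbf{x}-\boldsymbol{\mu}_i) - \frac{1}{2}\ln|\Sigma_i|,$$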

we get, for class 1

and for class 2

We get the equation for the decision boundary by setting the two discriminant functions equal, $g_1(\mathbf{x}) = g_2(\mathbf{x})$, which results in

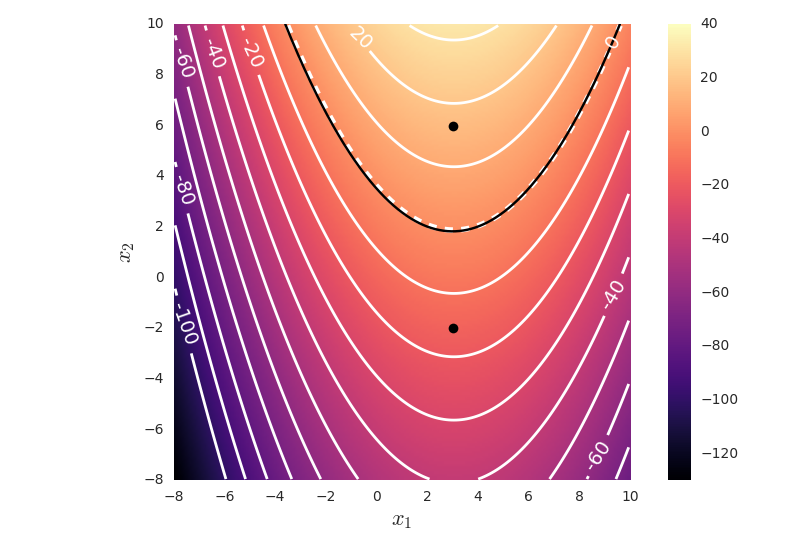
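Expanding the two discriminants shows why the boundary is a curve rather than a line: $g_1(\mathbf{x}) - g_2(\mathbf{x}) = \mathbf{x}^T A \mathbf{x} + \mathbf{b}^T\mathbf{x} + c$ with $A = -\tfrac{1}{2}(\Sigma_1^{-1} - \Sigma_2^{-1})$, $\mathbf{b} = \Sigma_1^{-1}\boldsymbol{\mu}_1 - \Sigma_2^{-1}\boldsymbol{\mu}_2$, and $c = -\tfrac{1}{2}\boldsymbol{\mu}_1^T\Sigma_1^{-1}\boldsymbol{\mu}_1 + \tfrac{1}{2}\boldsymbol{\mu}_2^T\Sigma_2^{-1}\boldsymbol{\mu}_2 - \tfrac{1}{2}\ln|\Sigma_1| + \tfrac{1}{2}\ln|\Sigma_2|$. The sketch below computes these coefficients; the function names and class parameters are hypothetical, not taken from the exercise.

```python
import numpy as np

def discriminant(x, mu, cov):
    """g(x) = -1/2 (x - mu)^T cov^{-1} (x - mu) - 1/2 ln|cov|, for x of shape (..., d)."""
    diff = np.asarray(x, dtype=float) - np.asarray(mu, dtype=float)
    maha = np.einsum("...i,ij,...j->...", diff, np.linalg.inv(cov), diff)
    return -0.5 * maha - 0.5 * np.linalg.slogdet(cov)[1]

def boundary_coefficients(mu1, cov1, mu2, cov2):
    """A, b, c such that g1(x) - g2(x) = x^T A x + b^T x + c."""
    mu1, mu2 = np.asarray(mu1, dtype=float), np.asarray(mu2, dtype=float)
    inv1, inv2 = np.linalg.inv(cov1), np.linalg.inv(cov2)
    A = -0.5 * (inv1 - inv2)                  # quadratic term, zero only if cov1 == cov2
    b = inv1 @ mu1 - inv2 @ mu2               # linear term
    c = (-0.5 * (mu1 @ inv1 @ mu1) + 0.5 * (mu2 @ inv2 @ mu2)
         - 0.5 * np.linalg.slogdet(cov1)[1] + 0.5 * np.linalg.slogdet(cov2)[1])
    return A, b, c

# Hypothetical class parameters, NOT the exercise's values.
mu1, cov1 = np.array([3.0, 3.0]), np.array([[2.0, 1.0], [1.0, 2.0]])
mu2, cov2 = np.array([6.0, 6.0]), np.array([[1.5, 0.0], [0.0, 1.5]])
A, b, c = boundary_coefficients(mu1, cov1, mu2, cov2)
```

Because the two covariance matrices differ, $A \neq 0$ and the boundary is a quadratic curve; only with equal covariances would it reduce to a straight line.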

c)

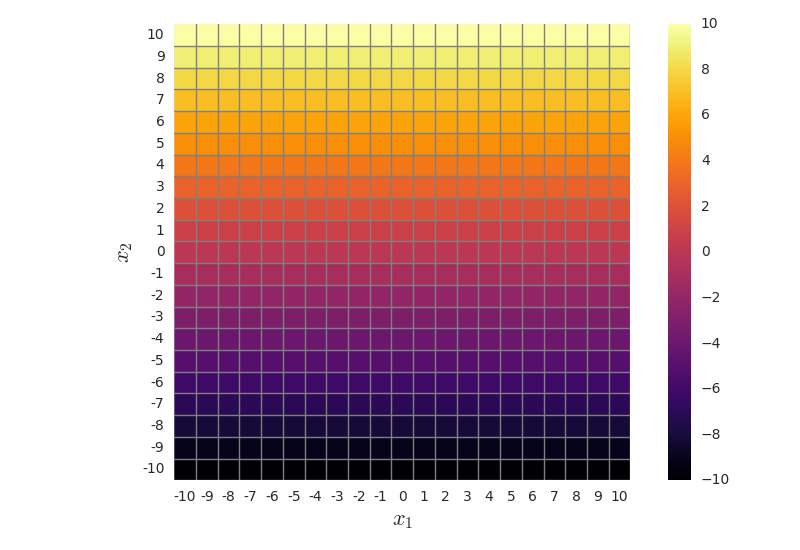

Here we plot the decision boundary on top of the difference between the unnormalized log posteriors of the two classes (class 1 minus class 2). Points where the difference is zero lie on the decision boundary, points with a positive difference belong to class 1, and points with a negative difference belong to class 2.

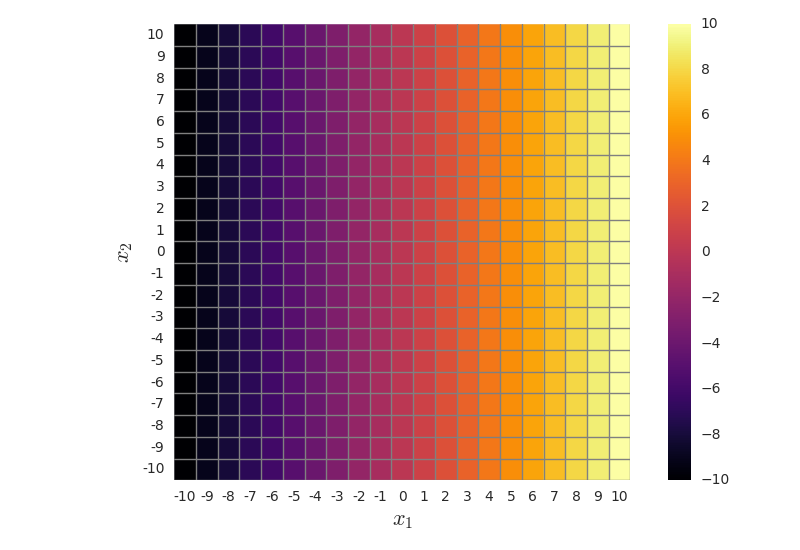
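The sign rule above can be sketched on a regular grid, which is essentially what the plotted surface shows. The helper name, grid range and class parameters are hypothetical choices for illustration.

```python
import numpy as np

def log_post(X, mu, cov):
    """Unnormalized log posterior (equal priors, constants dropped) for rows of X."""
    d = X - mu
    maha = np.einsum("ni,ij,nj->n", d, np.linalg.inv(cov), d)
    return -0.5 * maha - 0.5 * np.linalg.slogdet(cov)[1]

# Hypothetical class parameters, NOT the exercise's values.
mu1, cov1 = np.array([3.0, 3.0]), np.array([[2.0, 1.0], [1.0, 2.0]])
mu2, cov2 = np.array([6.0, 6.0]), np.array([[1.5, 0.0], [0.0, 1.5]])

# Evaluate the difference on a regular grid over a hypothetical region.
xs = np.linspace(0.0, 10.0, 101)
xx, yy = np.meshgrid(xs, xs)
grid = np.column_stack([xx.ravel(), yy.ravel()])
diff = (log_post(grid, mu1, cov1) - log_post(grid, mu2, cov2)).reshape(xx.shape)

# Sign rule: positive -> class 1, otherwise class 2 (exact zeros lie on the boundary
# and are assigned to class 2 here by convention).
labels = np.where(diff > 0, 1, 2)
```

With matplotlib, `plt.contour(xx, yy, diff, levels=[0])` would draw the zero level set of `diff`, i.e. the decision boundary, on top of an image of the difference.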

d)

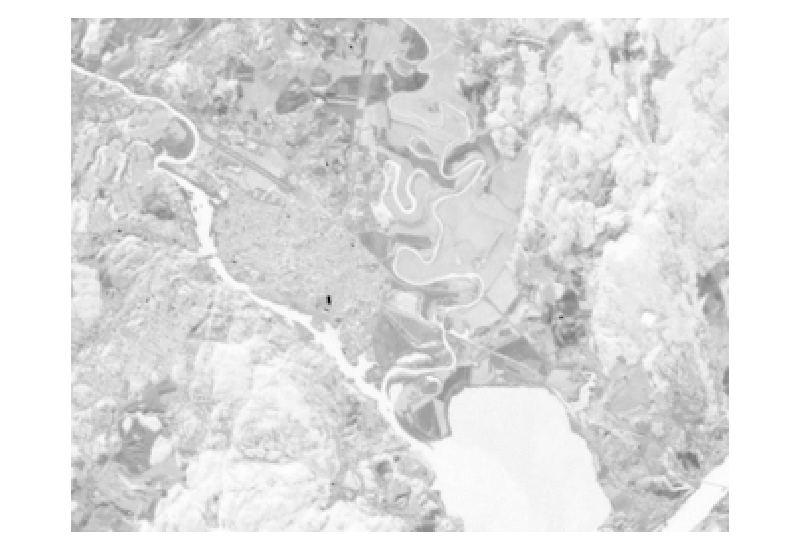

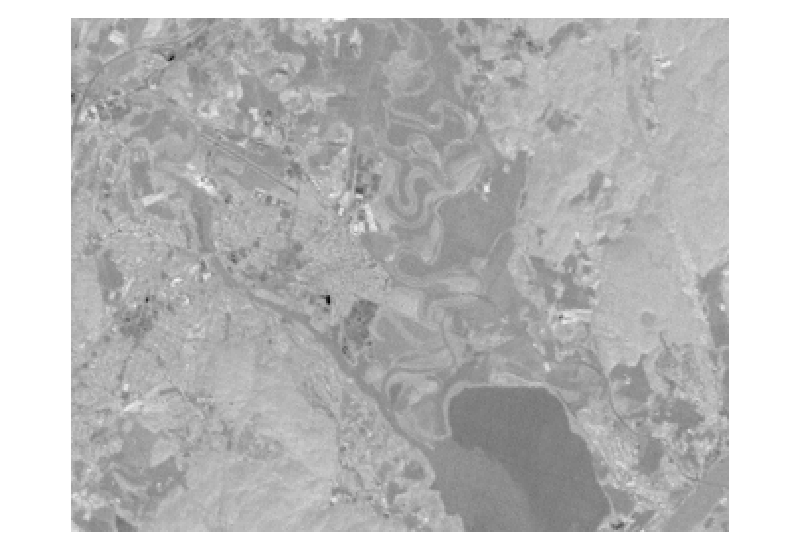

The feature images look like this.

e)

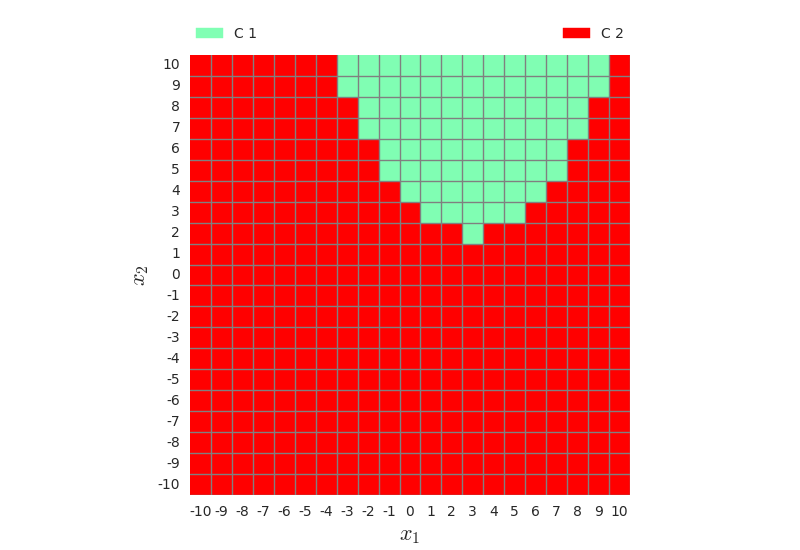

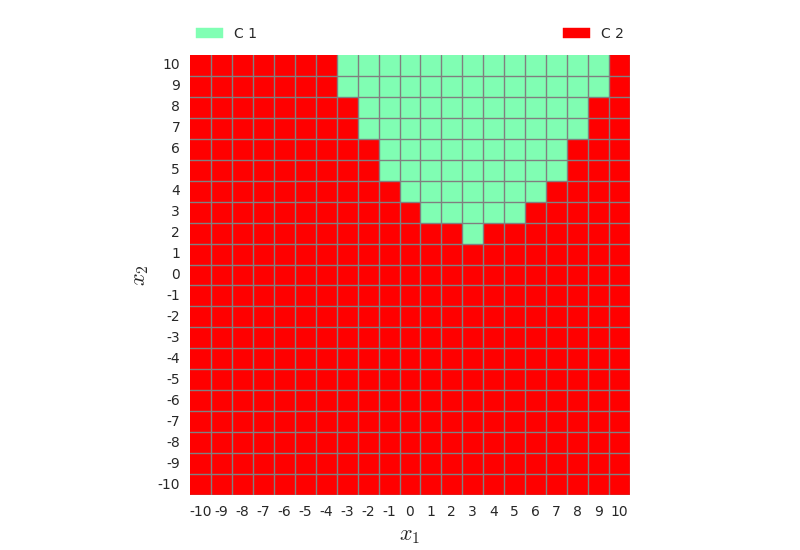

Figure 1.4 shows the classification done both with the analytical decision boundary (left) and with the multivariate Gaussian classifier from week 7 (right). The two results are identical and agree well with the shape of the decision boundary.

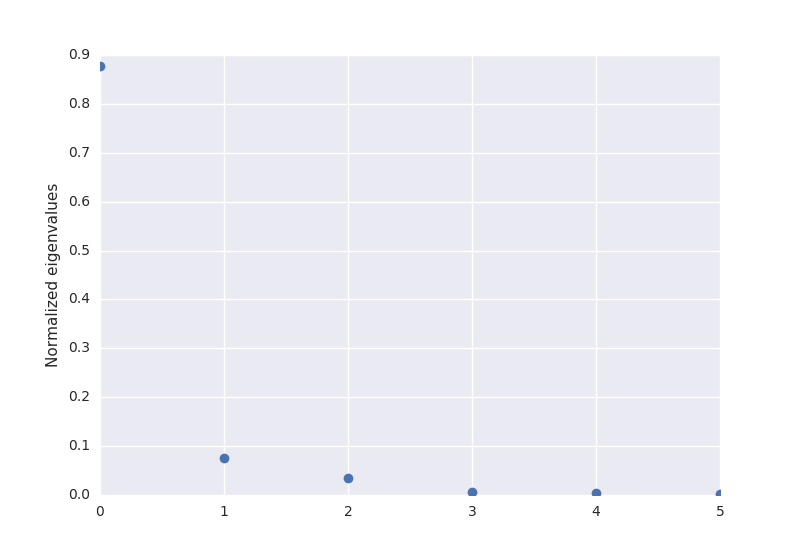

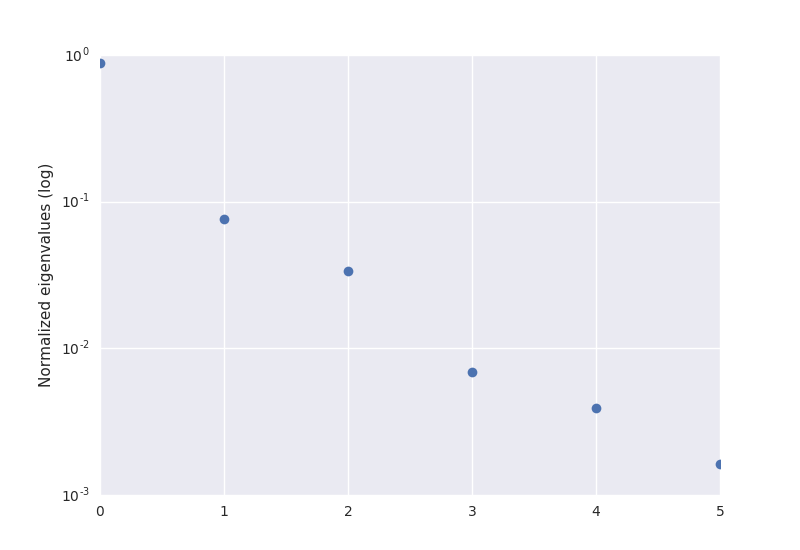

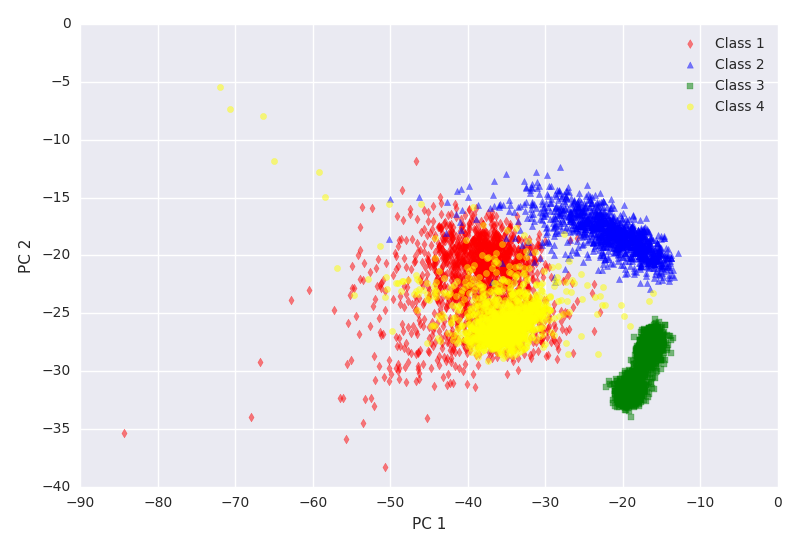
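The two classifications coincide because, with equal priors, assigning each pixel to the class with the largest discriminant $g_i$ is, for two classes, the same as checking the sign of $g_1 - g_2$. A minimal sketch of such a per-pixel Gaussian classifier on a stack of feature images; `gaussian_classify` is a hypothetical helper, not the week 7 code, and the feature images and class parameters are random or made-up stand-ins.

```python
import numpy as np

def gaussian_classify(features, means, covs, priors=None):
    """Label image (values 1..K) from a multivariate Gaussian classifier.

    features: (H, W, d) stack of feature images; means: list of (d,) arrays;
    covs: list of (d, d) arrays; priors: optional list of K prior probabilities."""
    H, W, d = features.shape
    X = features.reshape(-1, d)
    K = len(means)
    priors = np.full(K, 1.0 / K) if priors is None else np.asarray(priors, float)
    scores = np.empty((X.shape[0], K))
    for k, (mu, cov) in enumerate(zip(means, covs)):
        diff = X - mu
        maha = np.einsum("ni,ij,nj->n", diff, np.linalg.inv(cov), diff)
        # ln p(x|w_k) + ln P(w_k), dropping the class-independent -d/2 ln(2 pi)
        scores[:, k] = -0.5 * maha - 0.5 * np.linalg.slogdet(cov)[1] + np.log(priors[k])
    return (np.argmax(scores, axis=1) + 1).reshape(H, W)

# Hypothetical stand-in data, NOT the exercise's feature images.
rng = np.random.default_rng(1)
features = rng.uniform(0.0, 10.0, size=(20, 30, 2))
means = [np.array([3.0, 3.0]), np.array([6.0, 6.0])]
covs = [np.array([[2.0, 1.0], [1.0, 2.0]]), np.array([[1.5, 0.0], [0.0, 1.5]])]
labels = gaussian_classify(features, means, covs)
```

With equal priors the `np.log(priors[k])` term is the same for every class, so it cannot change the argmax; it only matters when the priors differ.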

Task 2 - Principal Component Analysis

The six principal component images of the Kjeller Landsat images.
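The principal component images can be computed by treating every pixel as a vector of band values: center the bands, form the band-to-band covariance matrix, sort its eigenvectors by decreasing eigenvalue, and project each pixel onto them. A minimal NumPy sketch, assuming the bands are stacked into an array of shape (H, W, B) with B = 6; the helper name is hypothetical and the correlated random data only stands in for the Kjeller images.

```python
import numpy as np

def principal_component_images(bands):
    """PCA of a multiband image.

    bands: (H, W, B) array, one band per channel. Returns (pc_images, eigvals),
    where pc_images has shape (H, W, B), ordered by decreasing variance."""
    H, W, B = bands.shape
    X = bands.reshape(-1, B).astype(float)   # one row per pixel (astype copies)
    X -= X.mean(axis=0)                      # center each band
    cov = np.cov(X, rowvar=False)            # (B, B) band covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)   # ascending order for symmetric matrices
    order = np.argsort(eigvals)[::-1]        # sort by decreasing variance
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    pcs = X @ eigvecs                        # project pixels onto the principal axes
    return pcs.reshape(H, W, B), eigvals

# Hypothetical stand-in for a 6-band image with correlated bands.
rng = np.random.default_rng(2)
bands = rng.normal(size=(50, 60, 6)) @ rng.normal(size=(6, 6))
pc_images, eigvals = principal_component_images(bands)
```

The variance of the k-th PC image equals the k-th eigenvalue, so later components have much smaller dynamic range; for display, each PC image is typically rescaled individually (for example to 0-255).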